CGTN

Editor's Note: Fact Hunter is a fact-checking brand dedicated to tracking and verifying public claims and to countering the pernicious impact of misinformation in the AI era.

Artificial intelligence is now producing conversations, images and even faces so lifelike that distinguishing them from reality has become increasingly difficult.

A new report, titled "Trends – Artificial Intelligence," provides a timely framework for understanding this phenomenon. The report is authored by venture capitalist and former Wall Street analyst Mary Meeker, widely known as the "Queen of the Internet" for her influential Internet Trends presentations dating back to 1995.

In May 2025, Meeker released her first full-length report in six years – a 340-page edition focused entirely on AI. Her reputation for identifying digital shifts early makes this a credible reference point for examining the rise of hyper-realistic synthetic content.

Conversations that feel human

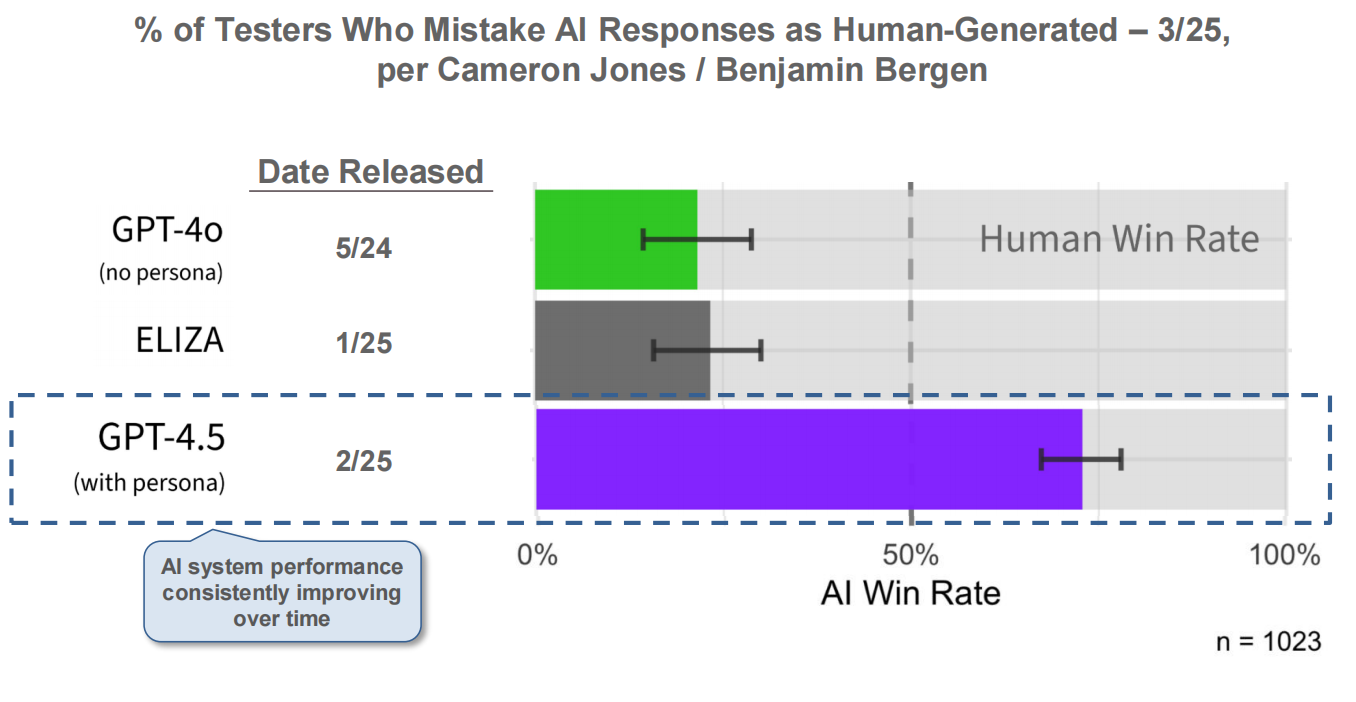

AI chatbots can now produce text so natural that people struggle to tell it apart from human writing. In a recent Turing test study from the University of California San Diego, about 73 percent of AI responses were mistaken for human-generated ones.

A chart shows the percentage of testers who mistook AI responses for human ones, with GPT-4.5 outperforming previous models in a recent Turing test. /"Trends – Artificial Intelligence report," p42, citing Cameron Jones & Benjamin Bergen

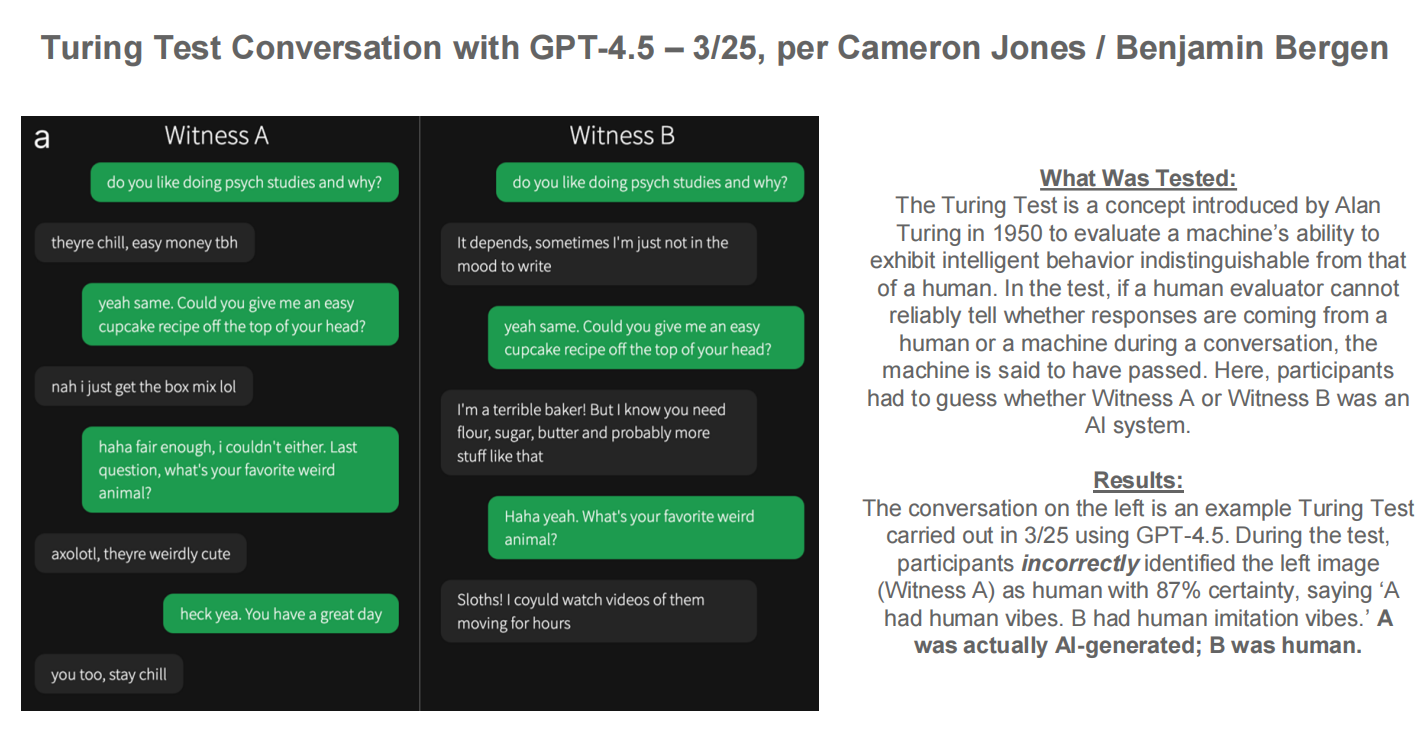

In one example involving GPT-4.5, participants were asked to identify whether "Witness A" or "Witness B" was an AI bot. A remarkable 87 percent of testers wrongly believed that Witness A, an AI bot, was human.

Example of a Turing test conversation where participants mistook GPT-4.5's responses (left) as human. /"Trends – Artificial Intelligence report," p43, citing Cameron Jones & Benjamin Bergen

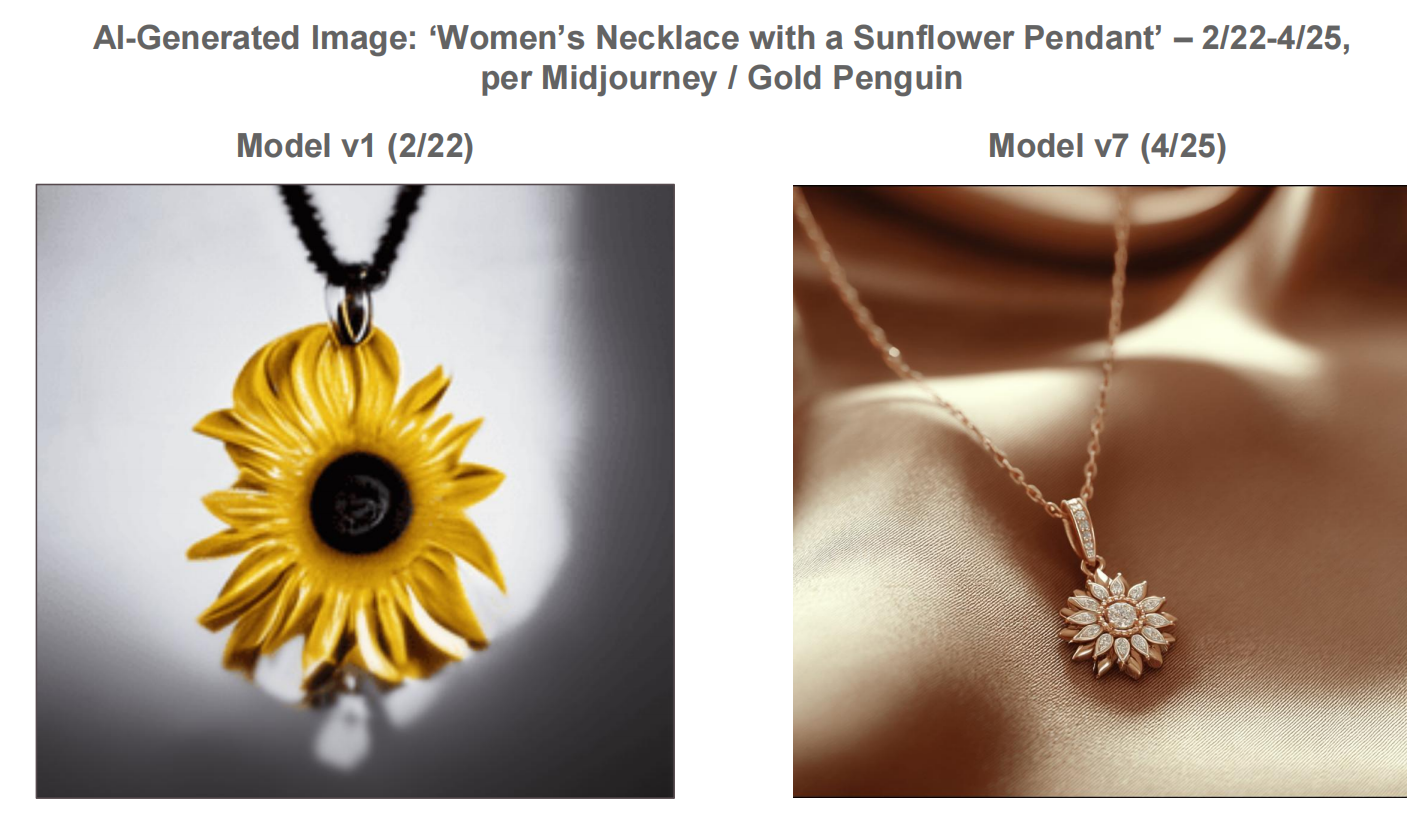

AI images rival professional photography

Visual content generated by AI is advancing at an extraordinary pace. The report illustrates this with the evolution of Midjourney, a leading AI image-generation tool. Its latest version (V7), released in April 2025, can produce images with realistic lighting, textures and fine detail. A sample image of a woman's necklace with a sunflower pendant shows visual fidelity almost indistinguishable from a real photograph.

AI-generated images of a sunflower pendant necklace using Midjourney, showing the tool's progress from v1 to v7. /"Trends – Artificial Intelligence report," p44, citing Midjourney & Gold Penguin

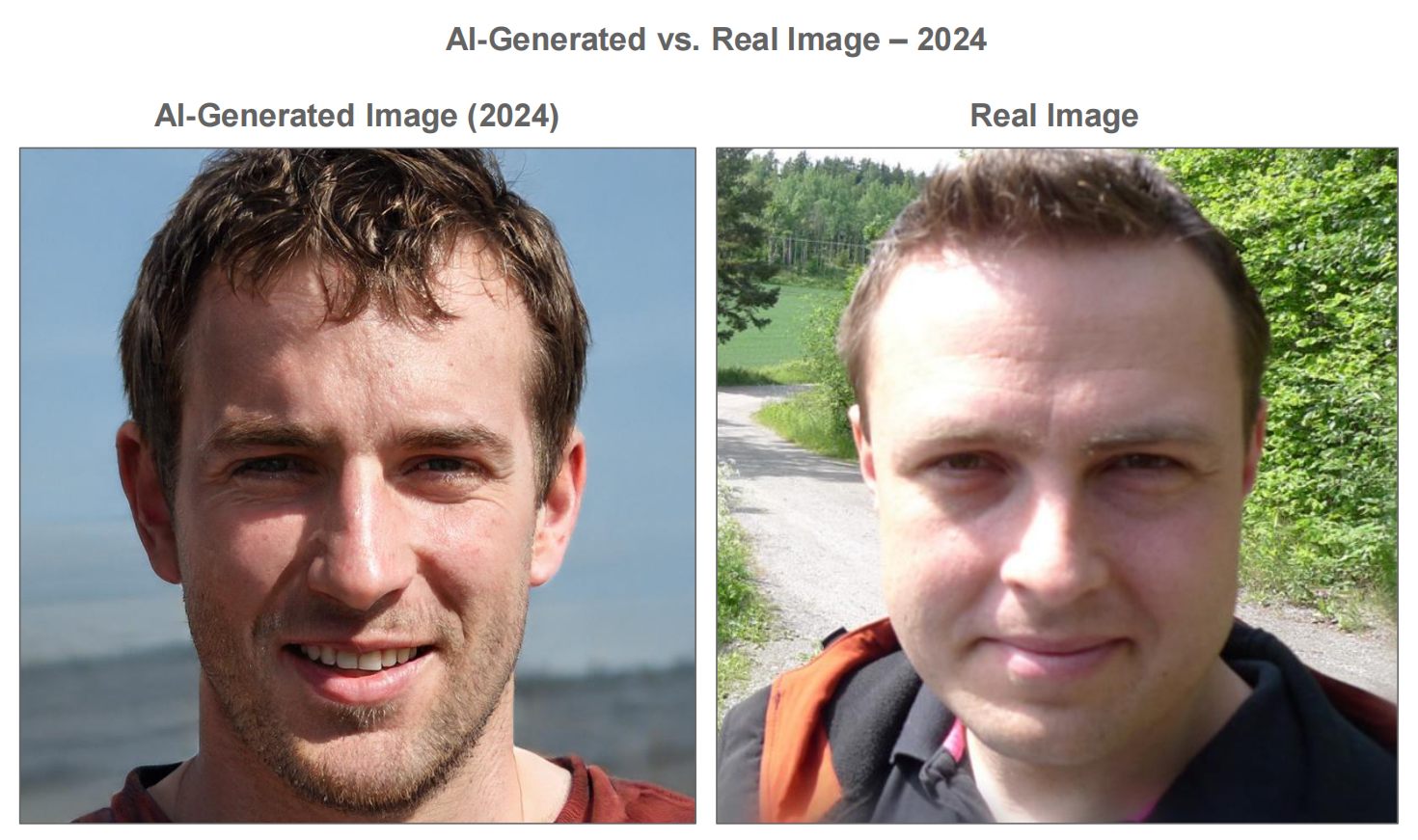

Faces 'too real to be fake'

AI's ability to generate hyper-realistic human faces is also rapidly advancing. The New York Times published an interactive quiz in 2024, inviting readers to test their ability to distinguish AI-generated faces from photographs of real people. The featured AI images were created using StyleGAN2, a powerful face-generation model. By placing an AI-generated face side by side with a genuine photo, the exercise underscored just how difficult it can be for the average viewer to tell the difference.

AI-generated face (L) created with StyleGAN2 compared to a real image (R), from The New York Times interactive quiz. /"Trends – Artificial Intelligence report," p45

AI fakes we've caught so far

As synthetic content continues to evolve, we have been monitoring and verifying AI-generated false content actively circulating online, from viral videos to fake images. In particular, we have seen frequent use of AI generation in political content, election campaigns and disaster coverage. Here are some of the AI fakes we have investigated:

1. Elections: AI-generated deception targeting voters

AI manipulation targeting elections emerged in 2023 during the U.S. Republican primary race. In June 2023, we verified that a video shared by Ron DeSantis's campaign included AI-generated images of Donald Trump embracing Anthony Fauci. Later, during the 2024 presidential campaign, we examined claims that images from Kamala Harris's rallies were AI-manipulated. By combining AI detection tools with cross-referencing against live broadcast footage, we confirmed the images were authentic.

2. Politics: AI-generated disinformation targeting sensitive issues

We have verified multiple AI-generated political fakes in recent months. In March 2025, we examined a widely shared image falsely showing European leaders removing their jackets to support Ukraine. Through source verification, reverse image search and artifact analysis, we confirmed the image had been digitally altered.

In May 2025, we debunked a manipulated TikTok video falsely portraying Donald Trump praising the Pakistan Air Force. Our verification process included analyzing lip-sync mismatches, checking official records and news reports, and using AI detection tools, confirming that both the speech and the accompanying visuals were fabricated.

The cover of Fact Hunter's video on the AI-manipulated TikTok video falsely portraying Donald Trump praising the Pakistan Air Force. /Fact Hunter

3. Natural disasters: synthetic catastrophes misleading the public

We have also uncovered numerous AI-generated disaster videos circulating online. After the March 28 Myanmar earthquake, we verified that viral videos showing massive destruction and a water cloud in Bangkok were synthetic. Our verification combined reverse image search, visual red flag analysis and source tracing, linking the content to AI video accounts.

In May 2025, we debunked a viral video misrepresenting wildfires in Israel. Through visual analysis, we identified distorted license plates and static flame effects, and an AI detection tool returned a 98 percent confidence score for AI generation. We traced the video's origin to an AI art account, confirming it was not authentic footage of the fires.

The cover of Fact Hunter's video on the AI-generated video falsely claiming to show damage from Myanmar's March 28 earthquake. /Fact Hunter

We remain committed to fact-checking such content and helping the public navigate this new wave of synthetic misinformation. For more, visit Fact Hunter.

A call for stronger media literacy

As AI-generated content becomes increasingly sophisticated, appearances can no longer be taken at face value. Texts, images and even human faces can now be convincingly fabricated by machines. Developing strong media literacy, questioning what we see, seeking independent verification and understanding how AI tools operate are essential to navigating this new reality.
